- #Multiprocessing python queue how to#

- #Multiprocessing python queue code#

- #Multiprocessing python queue windows#

As you may have noticed, this could have been much worse if we had not included the `if __name__ == "__main__":` guard at the end of the file. Let's check it out. On Windows, running the script without the guard produces a very long error that finishes with:

"RuntimeError: An attempt has been made to start a new process before the current process has finished its bootstrapping phase. This probably means that you are not using fork to start your child processes and you have forgotten to use the proper idiom in the main module: `if __name__ == '__main__': freeze_support() ...` The "freeze_support()" line can be omitted if the program is not going to be frozen to produce an executable."

It may not look like much, but imagine you have some computationally expensive initialization task. Perhaps you do some system checks when the program runs. If that work sits at module level, every spawned child process repeats it.
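The idiom the error message asks for can be sketched as follows. This is a minimal illustration, not the article's original listing: `expensive_initialization` is a hypothetical stand-in for the costly start-up work, and passing its result to `simple_func` as an argument is an assumption made for the example.

```python
import multiprocessing as mp


def expensive_initialization():
    # Hypothetical stand-in for system checks or other costly start-up work.
    return sum(range(1_000_000))


def simple_func(value):
    # Runs in the child process and receives the precomputed value as an argument.
    print(f"Child received {value}")


if __name__ == "__main__":
    # Everything in this block runs only in the parent. When a spawned child
    # re-imports this file, the guard keeps it from repeating the
    # initialization or starting processes of its own.
    mp.freeze_support()  # only matters when the program is frozen into an executable
    value = expensive_initialization()
    p = mp.Process(target=simple_func, args=(value,))
    p.start()
    p.join()
```

Keeping the initialization inside the guarded block means it runs once, in the parent, regardless of how child processes are started.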


Spawning means that a new interpreter starts and the code of the main module reruns. This explains why, if we run the code on Windows, the line `Before defining simple_func` appears twice.
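To see which behaviour applies on a given machine, one can ask the `multiprocessing` module directly. The sketch below uses `multiprocessing.get_start_method` and `multiprocessing.get_all_start_methods` from the standard library; the helper `describe_start_method` and its wording are hypothetical, added only for illustration.

```python
import multiprocessing as mp


def describe_start_method(method):
    # Summarize what the two start methods discussed here mean for module-level code.
    if method == "fork":
        return "fork: child copies the parent's memory; module-level code is not rerun"
    if method == "spawn":
        return "spawn: child starts a fresh interpreter and reruns module-level code"
    return f"{method}: see the multiprocessing documentation"


if __name__ == "__main__":
    print("Available:", mp.get_all_start_methods())
    print(describe_start_method(mp.get_start_method()))
```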


Running the simple example produces output that includes the line `Waiting for simple func to end`. Let's go to the core of the problem at hand by studying how this code, which begins with `import multiprocessing as mp`, behaves. If we run it on Windows, the line `Before defining simple_func` appears twice in the output, while on Linux it appears only once. It does not look like much, except for the second `Before defining simple_func`, and this difference is crucial.

On Linux, when you start a child process, it is forked (this was the long-standing default; Python 3.14 changed the Linux default to forkserver). Forking means that the child process inherits the memory state of the parent process. On Windows (and by default on macOS since Python 3.8), however, processes are spawned.
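A script consistent with the output described here might look like the following. It is a reconstruction under assumptions, not necessarily the original listing: the body of `simple_func`, its messages other than the ones quoted in the text, and the one-second sleep are all made up for illustration.

```python
import multiprocessing as mp
from time import sleep

# Module-level statement: a spawned child re-imports this file and prints it again.
print("Before defining simple_func")


def simple_func():
    print("Starting simple func")
    sleep(1)  # assumed delay so the parent visibly waits
    print("Finishing simple func")


if __name__ == "__main__":
    p = mp.Process(target=simple_func)
    p.start()
    print("Waiting for simple func to end")
    p.join()
```

With a forked child the module-level `print` runs once; with a spawned child it runs once in the parent and once more when the child imports the file.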


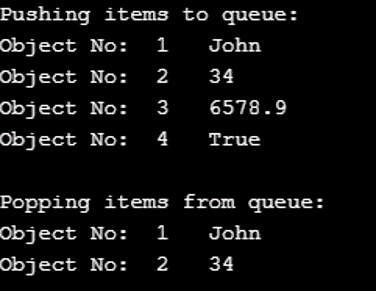

Differences of Multiprocessing on Windows and Linux

Multiprocessing is an excellent package if you ever want to speed up your code without leaving Python. When I started working with multiprocessing, I was unaware of the differences between Windows and Linux, which set me back several weeks of development time on a relatively big project. Let's quickly see how multiprocessing works and where Windows and Linux diverge. The quickest way of showing how to use multiprocessing is to run a simple function without blocking the main program, in a script that starts with `import multiprocessing as mp`.

I am using SageMath 9.0 and I tried to do parallel computation in two ways: 1) the parallel decorator built into SageMath, and 2) the Python MultiProcessing module. With the parallel decorator, everything works fine. With the MultiProcessing module, for the same problem and the same input, everything works fine for short output, but SageMath gets stuck after the computation if the output is long. I monitored the CPU usage: it peaks at first and then returns to zero, which means that the computation is complete. However, the output still does not appear.

What puzzles me is that the problem depends on the length of the output, not on the time of computation. For the same computation, once I add a line that manually sets the output to something short, or extracts a small part of the original output, the computation no longer gets stuck at the end, and that small part agrees with the original answer. I would like to know if there is any hidden parameter that prevents the Python MultiProcessing module from producing long outputs in SageMath.

Following a suggestion, I attached my code with an example. It starts with `from multiprocessing import Process, Queue` and defines a function `f(n)`. Note that the function `g` does strictly more work than `f`, but its answers are much shorter.
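The code attached to the SageMath question is not reproduced here, so the cause of the hang can only be guessed. One documented pitfall matches the symptoms: a process that has put items on a `multiprocessing.Queue` does not terminate until its buffered data has been flushed to the underlying pipe, so calling `join()` before `get()` can block once the result is large. The sketch below assumes that ordering is the issue; the worker `f`, the helper `run`, and the result size are hypothetical.

```python
from multiprocessing import Process, Queue


def f(n, queue):
    # Hypothetical worker: produces a deliberately long result.
    queue.put("x" * n)


def run(n):
    queue = Queue()
    p = Process(target=f, args=(n, queue))
    p.start()
    result = queue.get()  # drain the queue first
    p.join()              # then join; the reverse order can block on large results
    return result


if __name__ == "__main__":
    print(len(run(10_000_000)))
```

If the original code joins the worker before reading from the queue, swapping the two calls as in `run` is worth trying; a short result fits in the pipe buffer, which would explain why only long outputs get stuck.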
